Is your company using AI ethically? 4 ways to check

Learn what responsible AI usage looks like—from experts at Salesforce, Telefonica, and Fox Rothschild

Rapid advances in AI come with plenty of opportunities—and plenty of ethical considerations. While tools like generative AI can streamline everything from writing an email to creating an image, that doesn’t mean that all use cases for these systems are ethical.

As AI-powered tools become more widely used, concerns about bias and privacy are rising to the top of leaders’ radars. Even Sam Altman, the CEO of the artificial intelligence research laboratory that created ChatGPT, is calling on lawmakers to begin regulating how these systems are used.

While we don’t have legislation that outlines what responsible AI usage looks like just yet, there are a handful of experts guiding us toward more ethical ways of leveraging these systems. We sat down with three of them to explore AI’s latest advancements and discuss how companies can take advantage of these innovations without compromising employees’ privacy or creating bias.

The state of ethical AI: rapid advancements raise new questions

Within two months of launching, ChatGPT amassed more than 100 million users, and its adoption has only continued to skyrocket in the weeks since. A new report finds that nine out of ten workers at higher levels in their organizations now use generative AI tools. However, most experts agree that the guidelines for using these technologies aren’t keeping up with their rapid proliferation.

As Paula Goldman, Chief Ethical and Humane Use Officer at Salesforce, explains, “It feels like every week is a year or a decade in terms of news, and keeping up with the implications of those advancements is really important. There are still a number of issues that aren’t new but are just accelerated. Like thinking about bias in models or thinking about toxicity and the content that’s created.”

While it’s evident we still have a long way to go when it comes to developing frameworks for ethical AI usage, Goldman is encouraged by society’s growing interest in understanding how these innovations work. “The thing that makes me happy is that these questions are really part of the public dialogue. People are increasingly fascinated with these technologies. And that’s what we need, we need an all-hands-on-deck solution with guardrails,” she says.

The business case for ethical AI usage

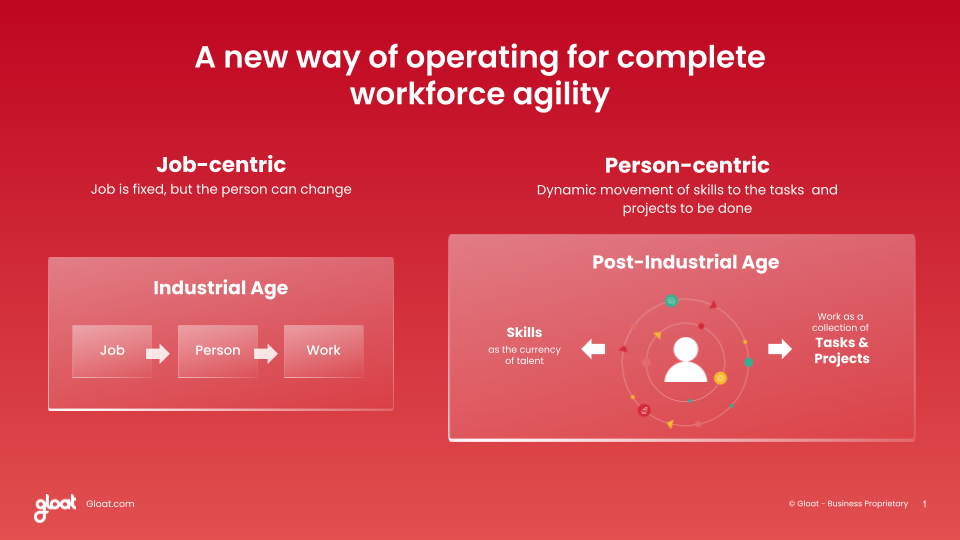

Beyond being the right thing to do, prioritizing responsible AI usage is also a smart business decision. Companies that use talent management tools powered by ethical AI often uncover internal talent that may have once been overlooked, enabling hiring managers to fully capitalize on all the skills their workforce has to offer. These systems can also generate recommended career paths for employees and suggest opportunities to help bridge existing skill gaps, in turn encouraging workers to take their professional progression to the next level.

However, companies will only gain long-term benefits from these systems if leaders ensure that the data they’re running on is being collected responsibly, especially with the potential for new legislation on the horizon. Odia Kagan, Partner and Chair of GDPR Compliance and International Privacy at Fox Rothschild, discusses the risks that organizations face when it comes to data privacy, noting, “We’ve seen it with data protection. You could be too big to fail and too big to find, but the other consequences like injunctions or requirements to delete data that was collected and processed illegally are a big deal because you need that data, right?”

4 checks to put in place to drive ethical and responsible AI usage

There are a few steps leaders can take to ensure their organizations are using AI responsibly. Best practices include:

#1. Ask about models and data sheets

Leaders shouldn’t shy away from asking about the models that are powering various AI systems they’re considering. In fact, Goldman encourages executives to engage in a dialogue with potential vendors. “You need to ask, is there a model or is there a data sheet? What’s going into these models? What are the standards that are associated with these models?” she suggests.

Goldman is optimistic that these conversations will lead to the creation of a set of guidelines leaders can use to evaluate future AI systems. “What’s exciting is that there’s momentum and convergence toward thinking not quite about standardization but convergence towards sets of metrics that we can compare across different products,” she explains.

#2. Test the data that’s going into these systems

Executives also need to have an idea of what goes into the implementation process for any AI system they’re considering, according to Goldman. “You need to consider the safeguards that are up in terms of testing. AI is only as good as the data that goes into it. So make sure that when it is implemented with one’s own data, first test the data that’s going into it, continue tests around the fairness of the models themselves, and then obviously the control about what data goes in and goes out,” she says.
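One way to make a fairness test like this concrete is to compare how often a system produces a favorable outcome for different groups. The Python sketch below is only an illustration, not the method Goldman or Salesforce uses: the records, the field names `group` and `recommended`, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in US employment selection guidelines) are all assumptions chosen for the example.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical records: which employees a tool recommended for a role.
records = [
    {"group": "A", "recommended": True},
    {"group": "A", "recommended": True},
    {"group": "A", "recommended": False},
    {"group": "A", "recommended": True},
    {"group": "B", "recommended": True},
    {"group": "B", "recommended": False},
    {"group": "B", "recommended": False},
    {"group": "B", "recommended": True},
]

rates = selection_rates(records, "group", "recommended")
ratio = disparate_impact_ratio(rates)

# Assumed threshold: the four-fifths rule of thumb, not a legal determination.
THRESHOLD = 0.8
needs_review = ratio < THRESHOLD
print(f"Selection rates: {rates}")
print(f"Disparate impact ratio: {ratio:.2f} (review needed: {needs_review})")
```

A low ratio does not prove that a system is biased, and a high one does not prove that it is fair. It is simply a signal that the data and the model’s outputs deserve a closer look before and after rollout.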

#3. Keep the people who will be using these systems top of mind

Because employees are the people who will be using the technology directly, leaders must prioritize their needs and potential use cases. Every decision should be made with them in mind, and that means considering how these technologies will be received and the potential indirect consequences associated with launching them.

“Some consequences are indirect, but they have a big impact,” explains Richard Benjamins, Chief AI and Data Strategist at Telefonica. “So if you’re an organization and you see those things, you have to act. You could say ‘My product complies with all the rules, 10% of people have damage, but 90% are enjoying it.’ But if you take a human-centered approach, not a utilitarian approach, then that 10% is still a lot of people. If you cause any harm, then you have to take that into account.”

Kagan echoes a similar sentiment, noting, “Leaders need to understand and drill down into the potential risks and see what they can do about them. We’re gonna run into similar issues to what we’re running into with other software. But now you’re running into an issue of there’s going to be off-the-shelf AI products that you can buy. Do you know what the risks are? Are you able to do anything about it?”

#4. Take risk mitigation one step further

Beyond safeguarding their organization from potential risks, leaders at responsible organizations will take their ethical AI efforts one step further by identifying new opportunities to harness these systems to drive positive changes. As Benjamins explains, “More and more things like ESG are becoming important. So as an organization with this powerful technology, you have to think about what are the potential negative ethical impacts. But you also have to think about how you can use this technology and this data for good, to solve big societal and planetary problems.”